36. Reinforcement Learning

This section shows how to use AGX Dynamics with Gymnasium-style environments to train reinforcement learning (RL) control policies.

You will learn how to:

Wrap an AGX simulation as a Gymnasium environment

Run and visualize example environments

Train controllers using popular RL libraries

36.1. Installation

Install the required Python packages:

pip install -r data/python/RL/requirements.txt

Note

Python versions earlier than 3.7 are not supported.

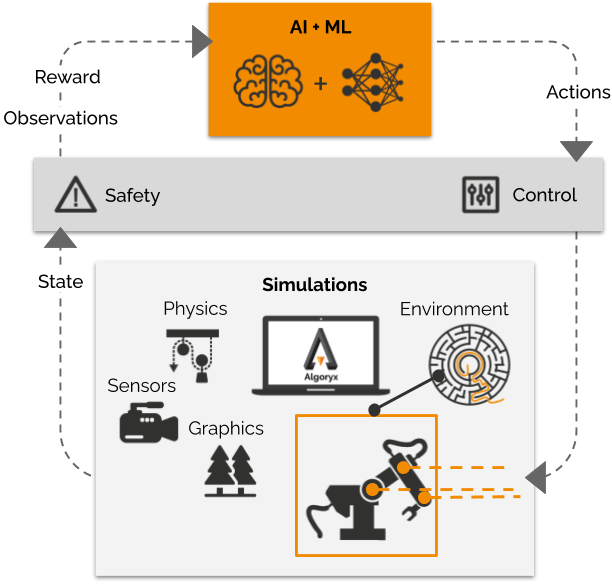

36.2. AGX gym environment

An AGXGymEnv inherits from gymnasium.Env and provides:

Implementations of the API methods step(), render(), close(), and reset()

Custom hooks:

_build_scene() - Sets up the AGX Dynamics simulation (the environment).

_set_action() - Passes the action to the controller. Called in step().

_observe() - Returns (obs, reward, terminal, truncated, info). Called in env.step().

Optional camera rendering and graphics
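The division of labor between the Gymnasium API methods and the hooks can be sketched in plain Python. This is a minimal, hypothetical sketch and not the actual AGXGymEnv source: it imports neither gymnasium nor agx, the names ToyGymEnv, PointMassEnv, and _step_simulation() are invented, and a one-dimensional point mass stands in for the AGX scene. It also assumes the scene is built on reset(). It only illustrates the call order: step() calls _set_action(), advances the simulation, and then returns whatever _observe() reports.

```python
class ToyGymEnv:
    """Hypothetical base class mirroring the hook layout of AGXGymEnv.

    Not the real class: nothing is imported from gymnasium or agx, and the
    invented _step_simulation() hook stands in for stepping AGX Dynamics.
    """

    # Gymnasium-style API methods -------------------------------------
    def reset(self, *, seed=None, options=None):
        # seed/options are accepted for API compatibility but unused here.
        self._build_scene()  # assumption: the scene is (re)built on reset
        obs, _, _, _, info = self._observe()
        return obs, info  # Gymnasium's reset() returns (obs, info)

    def step(self, action):
        self._set_action(action)  # pass the action to the controller
        self._step_simulation()   # advance the physics one time step
        return self._observe()    # (obs, reward, terminal, truncated, info)

    def render(self):
        return None  # optional camera rendering / graphics would go here

    def close(self):
        return None  # release simulation and graphics resources

    # Hooks implemented by each concrete environment -------------------
    def _build_scene(self):
        raise NotImplementedError

    def _set_action(self, action):
        raise NotImplementedError

    def _step_simulation(self):
        raise NotImplementedError

    def _observe(self):
        raise NotImplementedError


class PointMassEnv(ToyGymEnv):
    """Hypothetical task: push a 1-D unit point mass to x = 1.0."""

    DT = 0.05          # time step [s]
    MAX_STEPS = 200    # episode length before truncation

    def _build_scene(self):
        self.pos, self.vel, self.force, self.steps = 0.0, 0.0, 0.0, 0

    def _set_action(self, action):
        self.force = max(-1.0, min(1.0, float(action)))  # clip to [-1, 1]

    def _step_simulation(self):
        self.vel += self.force * self.DT  # unit mass: acceleration = force
        self.pos += self.vel * self.DT    # semi-implicit Euler
        self.steps += 1

    def _observe(self):
        distance = abs(1.0 - self.pos)
        obs = (self.pos, self.vel)
        reward = -distance                # closer to the target is better
        terminal = distance < 0.01        # reached the target
        truncated = self.steps >= self.MAX_STEPS
        return obs, reward, terminal, truncated, {}
```

A round trip looks like env = PointMassEnv(); obs, info = env.reset(); obs, reward, terminal, truncated, info = env.step(0.5). Keeping step() in the base class and overriding only the hooks means every concrete environment exposes the same Gymnasium-facing contract.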

36.3. Training a control policy

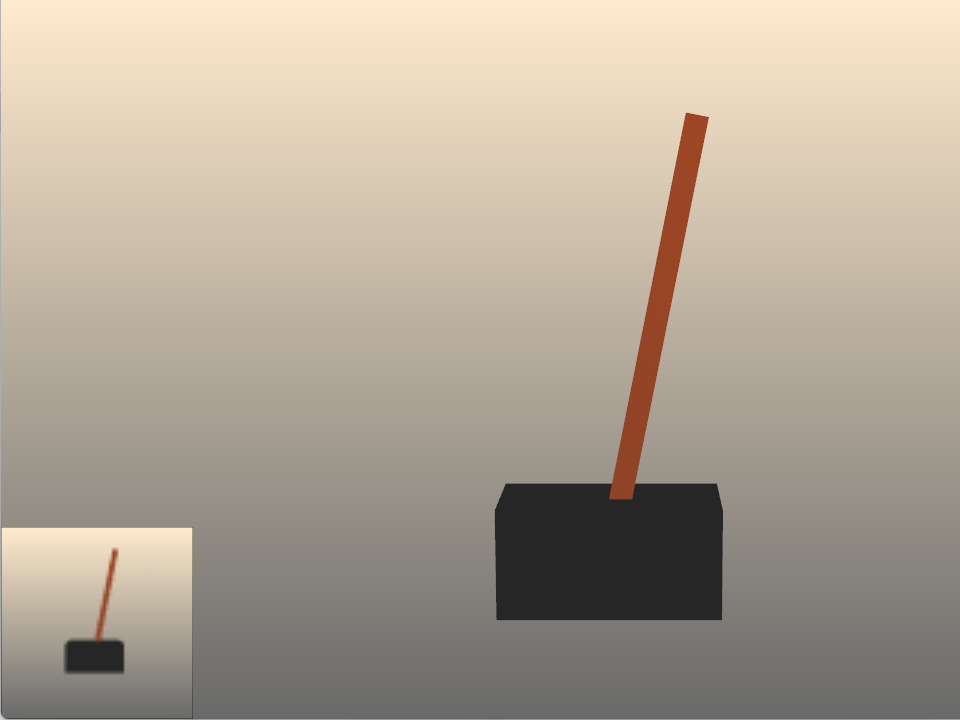

These examples use an AGX implementation of the classic reinforcement learning problem called cart pole, where the goal is to balance a pole on a moving cart. Unlike the classic CartPole, our environment uses continuous actions, and the observation is either the standard state or visual input from a virtual camera. The examples show how to train a control policy using stable-baselines3 and how to run trained control policies.
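To make "continuous actions" concrete, the sketch below uses the classic analytical cart-pole model (the equations and default parameters of Gymnasium's CartPole environment: masses, pole half-length, 10 N force magnitude, 0.02 s explicit Euler step, and the 2.4 m / 12 degree termination limits), but scales the force by an action in [-1, 1] instead of choosing between two fixed pushes. This is an illustration under those assumptions only: the AGX environment computes the motion with AGX Dynamics rather than these closed-form equations, and the function name cartpole_step is hypothetical.

```python
import math

# Parameters of the classic cart-pole model (Gymnasium CartPole defaults).
GRAVITY = 9.8
MASS_CART = 1.0
MASS_POLE = 0.1
TOTAL_MASS = MASS_CART + MASS_POLE
HALF_LENGTH = 0.5                      # half the pole length [m]
POLE_MASS_LENGTH = MASS_POLE * HALF_LENGTH
FORCE_MAG = 10.0                       # maximum force [N]
TAU = 0.02                             # time step [s]
X_LIMIT = 2.4                          # cart position limit [m]
THETA_LIMIT = 12 * 2 * math.pi / 360   # 12 degrees in radians


def cartpole_step(state, action):
    """Advance (x, x_dot, theta, theta_dot) one step with a continuous action.

    action is a float in [-1, 1]; values outside are clipped. The classic
    discrete version would instead apply exactly +FORCE_MAG or -FORCE_MAG.
    """
    x, x_dot, theta, theta_dot = state
    force = FORCE_MAG * max(-1.0, min(1.0, action))
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    temp = (force + POLE_MASS_LENGTH * theta_dot ** 2 * sin_t) / TOTAL_MASS
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        HALF_LENGTH * (4.0 / 3.0 - MASS_POLE * cos_t ** 2 / TOTAL_MASS)
    )
    x_acc = temp - POLE_MASS_LENGTH * theta_acc * cos_t / TOTAL_MASS

    # Explicit Euler integration (positions use the old velocities).
    x += TAU * x_dot
    x_dot += TAU * x_acc
    theta += TAU * theta_dot
    theta_dot += TAU * theta_acc

    terminated = abs(x) > X_LIMIT or abs(theta) > THETA_LIMIT
    return (x, x_dot, theta, theta_dot), terminated
```

With a continuous action the policy can apply small corrective forces near equilibrium instead of constantly pushing at full strength in one direction or the other.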

Run the CartPole environment with a random policy:

python data/python/RL/cartpole.py

Train a control policy from scratch:

python data/python/RL/cartpole.py --train

Train using visual observations:

python data/python/RL/cartpole.py --train --observation-space visual

Train a policy on 4 parallel environments:

python data/python/RL/cartpole.py --train --num-env 4 --observation-space visual
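Training on several environments in parallel means the learner sends a batch of actions, one per environment copy, and receives a batch of transitions back; a copy that finishes its episode is reset automatically so the batch stays full. stable-baselines3 provides vectorized wrappers for this (DummyVecEnv and SubprocVecEnv), but which one cartpole.py uses is not stated here, so the sketch below is a hypothetical, single-process illustration of the idea. CounterEnv and SyncVecEnv are invented names.

```python
class CounterEnv:
    """Hypothetical toy env: the episode ends after `length` steps."""

    def __init__(self, length):
        self.length = length
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t, {}

    def step(self, action):
        self.t += 1
        obs, reward = self.t, float(action)
        terminated = self.t >= self.length
        return obs, reward, terminated, False, {}


class SyncVecEnv:
    """Step several environments in lockstep, auto-resetting finished ones."""

    def __init__(self, env_fns):
        self.envs = [fn() for fn in env_fns]

    def reset(self):
        return [env.reset()[0] for env in self.envs]

    def step(self, actions):
        assert len(actions) == len(self.envs), "one action per environment"
        observations, rewards, dones = [], [], []
        for env, action in zip(self.envs, actions):
            obs, reward, terminated, truncated, _ = env.step(action)
            done = terminated or truncated
            if done:
                # Start a new episode so the batch always has len(envs) entries.
                # Real wrappers typically also keep the final observation of
                # the finished episode; that is omitted in this sketch.
                obs, _ = env.reset()
            observations.append(obs)
            rewards.append(reward)
            dones.append(done)
        return observations, rewards, dones
```

For example, SyncVecEnv([lambda n=n: CounterEnv(n) for n in (1, 2, 3, 4)]) steps four copies with different episode lengths; the default argument n=n binds each lambda to its own length.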

Load and run a trained control policy:

python data/python/RL/cartpole.py --load path/to/trained/policy

Load one of our pre-trained policies:

python data/python/RL/cartpole.py --observation-space visual --load data/python/RL/policyModels/cartpole_visual_policy.zip

36.4. Environment gallery

To list all environments:

python data/python/RL/run_env.py -l

To run an environment using a random policy, pass the environment ID to run_env.py:

python data/python/RL/run_env.py --env agx-pushing-robot-v0
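Running an environment with a random policy amounts to the standard Gymnasium interaction loop: reset, then repeatedly sample an action and call step() until the episode terminates or is truncated. The sketch below is a hypothetical, dependency-free illustration of that loop and not the implementation of run_env.py; RandomWalkEnv, its action bounds, and run_random_policy are invented. With gymnasium installed, the sampling line would typically be env.action_space.sample().

```python
import random


class RandomWalkEnv:
    """Hypothetical env with a continuous 1-D action in [-1, 1]."""

    low, high = -1.0, 1.0  # bounds of the action space

    def reset(self):
        self.pos, self.t = 0.0, 0
        return self.pos, {}

    def step(self, action):
        self.pos += action
        self.t += 1
        terminated = abs(self.pos) > 3.0  # wandered too far
        truncated = self.t >= 100         # time limit
        return self.pos, -abs(self.pos), terminated, truncated, {}


def run_random_policy(env, episodes=3, seed=0):
    """Roll out uniformly random actions; return the total reward per episode."""
    rng = random.Random(seed)
    returns = []
    for _ in range(episodes):
        obs, info = env.reset()
        total, done = 0.0, False
        while not done:
            action = rng.uniform(env.low, env.high)  # random policy
            obs, reward, terminated, truncated, info = env.step(action)
            total += reward
            done = terminated or truncated
        returns.append(total)
    return returns
```

Seeding the random generator makes a random-policy run reproducible, which is useful as a baseline when comparing against trained policies.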

36.4.1. Classic and Robotics

Environment |

ID |

|---|---|

Classic Cartpole |

agx-cartpole-v0 |

Balances a pole on a cart. |

|

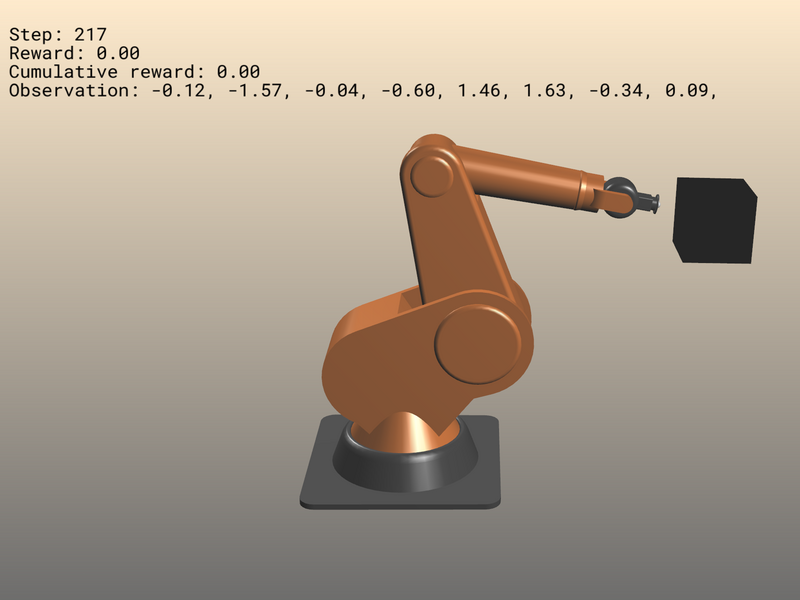

Pushing robot |

agx-pushing-robot-v0 |

The task of the 2DOF robot is to find the box and push it as far away from as possible. |

|

36.4.2. Wheel loading

To quickly get started with more complex tasks involving heavy vehicles and terrain interaction, we provide several example wheel-loading environments that operate on AGX terrain. The environments use different vehicle models but share the same goal: to move soil and rocks using the bucket. Because these are example environments, their reward functions are not tuned, and training on them should not be expected to produce useful control policies. The wheel loaders can be controlled using the keyboard, a random policy, or a pre-programmed heuristic policy.

Run an environment using the DL300 wheel loader with a random policy:

python data/python/RL/run_env.py --env agx-dl300-terrain-v0

Run the WA475 wheel loader on terrain with rocks, using keyboard control:

python data/python/RL/run_env.py --env agx-wa475-flat-terrain-and-rocks-v0 --policy keyboard

Run using the Algoryx wheel loader with a pre-programmed heuristic policy:

python data/python/RL/run_env.py --env agx-algoryxwheelloader-terrain-v0 --policy heuristic

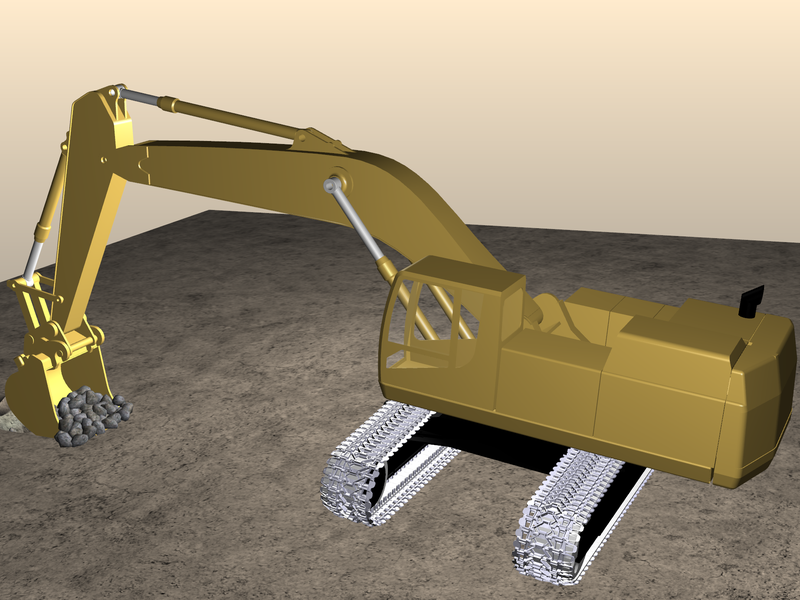

36.4.3. Excavation

| Environment | ID | Description |
|---|---|---|
| Excavator 365 | agx-365-terrain-v0 | Move soil on a flat terrain using the 365 excavator. |