60. Appendix 6: RL with AGX Dynamics

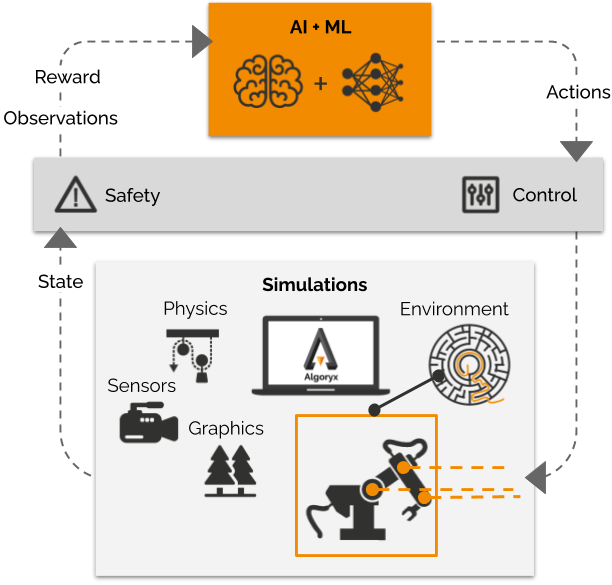

This section shows how to wrap an AGX Dynamics simulation as an OpenAI gym environment, and how to use that environment to train intelligent agents with AGX Dynamics' high-fidelity physics and popular RL libraries such as stable-baselines3 or pfrl.

AGX Dynamics provides reliable nonsmooth multidomain dynamics simulation. It can run faster than real time, generating the accurate data needed to train your agents and easing the transition from simulation to the real-life application.

Install the Python requirements with the command pip install -r data/python/RL/requirements.txt, then test the included examples. The command python data/python/RL/cartpole.py runs the cartpole example with an untrained policy, and python data/python/RL/cartpole.py --load data/python/RL/policyModels/cartpole_policy.zip runs it with a pre-trained policy.

Note

We do not support platforms with Python versions older than 3.7.

60.1. AGX OpenAI gym environment

An OpenAI gym environment typically contains one RL agent that can observe its surroundings, partially or fully, and act upon them, thereby changing the state of the environment.

Developers of custom environments must implement these main gym environment API methods:

step() - Sets the next action on the agent, steps the environment forward in time, and returns the observation, reward, terminal flag and optional info.

reset() - Resets the environment to an initial state and returns an initial observation.

render() - Renders the environment.

close() - Performs any necessary cleanup. Environments automatically close themselves when garbage collected or when the program exits.

seed() - Sets the seed for this env’s random number generator(s).

They must also provide the following attributes:

action_space - The gym.Space object corresponding to valid actions.

observation_space - The gym.Space object corresponding to valid observations.

reward_range - A tuple corresponding to the min and max possible rewards.
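To make this contract concrete, the following is a minimal, dependency-free sketch of an environment with the methods and attributes listed above. It deliberately does not import gym: IntRangeSpace is a hypothetical stand-in for a gym.Space such as gym.spaces.Discrete, and the one-dimensional walk-to-the-goal task is invented purely for illustration.

```python
import random


class IntRangeSpace:
    """Hypothetical stand-in for a gym.Space: the integers low..high."""

    def __init__(self, low, high, rng):
        self.low, self.high = low, high
        self._rng = rng

    def sample(self):
        # Uniformly pick a valid element, in the spirit of gym.Space.sample().
        return self._rng.randint(self.low, self.high)

    def contains(self, x):
        return isinstance(x, int) and self.low <= x <= self.high


class WalkToGoalEnv:
    """Toy task: action 0 moves left, action 1 moves right; reach +goal to finish."""

    def __init__(self, goal=5):
        self.goal = goal
        self._rng = random.Random()
        self.action_space = IntRangeSpace(0, 1, self._rng)
        self.observation_space = IntRangeSpace(-goal, goal, self._rng)
        # -1 for every step that does not reach the goal, 0 on the final step.
        self.reward_range = (-1.0, 0.0)
        self.position = 0

    def seed(self, seed=None):
        self._rng.seed(seed)
        return [seed]

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        if not self.action_space.contains(action):
            raise ValueError(f"invalid action: {action!r}")
        delta = 1 if action == 1 else -1
        # Clamp so the observation always stays inside observation_space.
        self.position = max(-self.goal, min(self.goal, self.position + delta))
        terminal = self.position == self.goal
        reward = 0.0 if terminal else -1.0
        return self.position, reward, terminal, {}

    def render(self, mode="human"):
        cells = ["."] * (2 * self.goal + 1)
        cells[self.position + self.goal] = "A"
        text = "".join(cells)
        if mode == "ansi":
            return text
        print(text)
        return None

    def close(self):
        # Nothing to release here; an AGX environment would free simulation resources.
        pass


# Usage: run one episode with a random policy.
env = WalkToGoalEnv(goal=3)
env.seed(0)
observation = env.reset()
terminal = False
while not terminal:
    observation, reward, terminal, info = env.step(env.action_space.sample())
env.close()
```

In this sketch both spaces share the environment's own random.Random instance, so calling seed() also makes action_space.sample() reproducible.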

The class AGXGymEnv inherits from the OpenAI gym environment. It initializes AGX, creates an agxSDK::Simulation, implements the main gym API methods, and cleans up all resources on exit. This leaves the user of AGXGymEnv to implement the methods for modeling the scene, setting actions and returning observations:

_build_scene() - Builds an AGX Dynamics simulation (models the environment), much like the BuildScene() function in the Python tutorials. This method is called in env.reset().

_set_action(action) - Sets the action on the agent. This method is called in env.step().

_observe() - Returns the tuple (observation -> numpy.array, reward -> float, terminal -> bool, info -> dict). This method is called in env.step().

_setup_gym_environment_spaces - Creates and sets the action, observation and reward range attributes.
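This division of work is a template-method pattern: the base class owns reset() and step(), and the subclass fills in the hooks. The pure-Python sketch below illustrates that call flow only. It is not the actual AGXGymEnv implementation: SketchBaseEnv, FakeSimulation, PointMass and PushBlockEnv are hypothetical, having reset() call _observe() to obtain the initial observation is an assumption, and a real subclass would create agxSDK objects in _build_scene() instead of a fake point mass.

```python
class PointMass:
    """Hypothetical 1D body standing in for a rigid body in the simulation."""

    def __init__(self, mass):
        self.mass = mass  # kg
        self.position = 0.0  # m
        self.velocity = 0.0  # m/s
        self.force = 0.0  # N


class FakeSimulation:
    """Hypothetical stand-in for agxSDK::Simulation."""

    def __init__(self, time_step=0.01):
        self.time_step = time_step  # s
        self.time = 0.0
        self.bodies = []

    def add(self, body):
        self.bodies.append(body)

    def step_forward(self):
        # Semi-implicit Euler: update velocity first, then position.
        for body in self.bodies:
            body.velocity += body.force / body.mass * self.time_step
            body.position += body.velocity * self.time_step
        self.time += self.time_step


class SketchBaseEnv:
    """Owns the gym API methods and calls the user hooks."""

    def __init__(self):
        self.sim = None
        self._setup_gym_environment_spaces()

    def reset(self):
        # Build a fresh scene, then report its initial observation.
        self.sim = FakeSimulation()
        self._build_scene()
        observation, _, _, _ = self._observe()
        return observation

    def step(self, action):
        self._set_action(action)
        self.sim.step_forward()
        return self._observe()

    # Hooks left to the user.
    def _setup_gym_environment_spaces(self):
        raise NotImplementedError

    def _build_scene(self):
        raise NotImplementedError

    def _set_action(self, action):
        raise NotImplementedError

    def _observe(self):
        raise NotImplementedError


class PushBlockEnv(SketchBaseEnv):
    """Toy user environment: push a 2 kg block to x = 1 m."""

    TARGET = 1.0  # m

    def _setup_gym_environment_spaces(self):
        # A real environment would assign gym.spaces objects here.
        self.action_space = (-10.0, 10.0)  # allowed force in N
        self.reward_range = (float("-inf"), 0.0)

    def _build_scene(self):
        self.block = PointMass(mass=2.0)
        self.sim.add(self.block)

    def _set_action(self, action):
        low, high = self.action_space
        self.block.force = max(low, min(high, float(action)))

    def _observe(self):
        observation = [self.block.position, self.block.velocity]
        error = abs(self.block.position - self.TARGET)
        return observation, -error, error < 0.01, {"time": self.sim.time}
```

Because the base class never touches the scene directly, rebuilding the scene on every reset() keeps episodes independent without any extra bookkeeping in the subclass.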

If you want rendered images as observations, you can initialize graphics and use agxOSG::ExampleApplication for simple rendering. Rendering is controlled by the user.

```python
from agxPythonModules.agxGym.envs.cartpole import CartpoleEnv

env = CartpoleEnv()
# This initializes graphics
env.init_render(mode="rgb_array", sync_real_time=False)
env.reset()
for _ in range(10):
    env.step(env.action_space.sample())
    # Render a new frame; returns a list of images from the virtual cameras
    imgs = env.render(mode="rgb_array")
env.close()
```

The mode can be "human" or "rgb_array". With mode="human", a graphics window is created for viewing the simulation; for headless rendering, use mode="rgb_array". Calling images = env.render(mode="rgb_array") returns a list of images from the virtual cameras in the environment. To create virtual cameras, you must implement the method AGXGymEnv._setup_virtual_cameras(). See agxPythonModules.agxGym.envs.cartpole.CartpoleEnv for an example of how to do this.

60.2. Example environments

You can start any of the example environments by running python data/python/RL/run_env.py --env name-of-environment. This starts the environment and controls the agent with a random policy. To list the available environments, run python data/python/RL/run_env.py -l.
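Conceptually, a launcher like run_env.py parses the command line and then drives the chosen environment with randomly sampled actions. The sketch below is a hypothetical reconstruction, not the shipped script: only the --env and -l flags and the two terrain environment ids come from this section, while KNOWN_ENVS, main() and run_random_policy() are illustrative names, and constructing the actual AGX environment is left out.

```python
import argparse

# Hypothetical registry; only these two ids are named in this section.
KNOWN_ENVS = ["agx-shovel-terrain-v0", "agx-wheel-loader-terrain-v0"]


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Run an environment with a random policy.")
    parser.add_argument("--env", help="name of the environment to run")
    parser.add_argument("-l", action="store_true", dest="list_envs",
                        help="list the available environments and exit")
    return parser.parse_args(argv)


def run_random_policy(env, max_steps=1000):
    """Reset env, then step it with sampled actions until terminal or max_steps."""
    env.reset()
    total_reward = 0.0
    step = 0
    for step in range(1, max_steps + 1):
        _, reward, terminal, _ = env.step(env.action_space.sample())
        total_reward += reward
        if terminal:
            break
    return step, total_reward


def main(argv=None):
    args = parse_args(argv)
    if args.list_envs:
        print("\n".join(KNOWN_ENVS))
        return 0
    if args.env not in KNOWN_ENVS:
        print(f"unknown or missing --env: {args.env!r} (use -l to list)")
        return 1
    # A real script would construct the AGX environment for args.env here
    # and pass it to run_random_policy(); that step is omitted in this sketch.
    print(f"selected {args.env}")
    return 0


if __name__ == "__main__":
    main()
```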

60.2.1. Cartpole environment

Run the example with python data/python/RL/cartpole.py. Add the argument --train to train a new policy model. Add the argument --load path/to/trained/policy to load a previously trained policy, either to continue training it or just to demo the results.

The cartpole environment is an implementation of the classic cartpole problem as an AGXGymEnv environment. A cart can move in one dimension, and a pole rotating around one axis is attached to the cart. By moving the cart correctly, it is possible to balance the pole. The observations are the position of the cart, the rotation of the pole, the velocity of the cart and the angular velocity of the pole. The action is the force applied to the cart at each timestep.
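As an illustration of what _observe() might compute for cartpole, here is a sketch that packs the four observed quantities in the order listed above and decides termination. The limits (pole angle beyond 12 degrees, cart beyond 2.4 m) are borrowed from the classic gym CartPole task, and the reward of 1.0 for every step the pole stays up is likewise an assumption; the AGX example may use different values. The state is passed in as plain floats rather than read from AGX bodies, and a plain list replaces the numpy array to keep the sketch dependency-free.

```python
import math
from dataclasses import dataclass

# Assumed limits, taken from the classic gym CartPole task.
ANGLE_LIMIT = math.radians(12.0)  # rad
POSITION_LIMIT = 2.4  # m


@dataclass
class CartpoleState:
    cart_position: float  # m
    pole_angle: float  # rad, 0 when upright
    cart_velocity: float  # m/s
    pole_angular_velocity: float  # rad/s


def observe(state):
    """Return (observation, reward, terminal, info) for a cartpole state."""
    observation = [
        state.cart_position,
        state.pole_angle,
        state.cart_velocity,
        state.pole_angular_velocity,
    ]
    terminal = (abs(state.pole_angle) > ANGLE_LIMIT
                or abs(state.cart_position) > POSITION_LIMIT)
    # Assumed reward: 1.0 while the pole is balanced, 0.0 once the episode ends.
    reward = 0.0 if terminal else 1.0
    return observation, reward, terminal, {}
```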

To run the same example with only a camera image as the observation, add the argument --observation-space visual. This initializes graphics during training and uses a small convolutional network as the feature extractor.

60.2.2. Pushing robot environment

Run the example with python data/python/RL/pushing_robot.py. Add the argument --train to train a new policy. Add the argument --load path/to/trained/policy to load a previously trained policy, either to continue training it or just to demo the results.

The pushing robot environment is an example of a somewhat more complex environment. It is a robot with two degrees of freedom that must find the box in front of it and use its end-effector to push the box away from the robot. The observations are the angle and speed of the robot joints, the world position of the tool and the relative position between the tool and the box. The action is the torque to apply to each joint at each timestep. The reward is the distance between the current position of the box and its starting position.
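The observation and reward described above reduce to simple vector arithmetic. This sketch assumes the joint states and the tool and box positions have already been read from the simulation as tuples (in an AGXGymEnv subclass this would happen inside _observe()); the function names, the flat ten-element layout and the box-minus-tool sign convention for the relative position are hypothetical choices.

```python
import math


def pushing_robot_observation(joint_angles, joint_speeds, tool_position, box_position):
    """Flatten 2 joint angles, 2 joint speeds, tool xyz and box-minus-tool xyz."""
    relative = [b - t for b, t in zip(box_position, tool_position)]
    return [*joint_angles, *joint_speeds, *tool_position, *relative]


def pushing_robot_reward(box_position, box_start_position):
    """Euclidean distance the box has moved from its starting position."""
    return math.sqrt(sum((p - s) ** 2 for p, s in zip(box_position, box_start_position)))
```

A reward based on displacement from the start grows as long as the box keeps moving away, so the agent is encouraged to push rather than merely touch the box.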

60.2.3. Shovel terrain environment

Run the example with python data/python/RL/run_env.py --env agx-shovel-terrain-v0. We do not ship a pre-trained example of this environment.

This environment is a shovel constrained to the world with three degrees of freedom. It can move forward, backward and tilt the bucket. The goal is to fill the bucket by digging in the deformable terrain.

60.2.4. Wheel loader terrain environment

Run the example with python data/python/RL/run_env.py --env agx-wheel-loader-terrain-v0. We do not ship a pre-trained example of this environment.

This environment is a wheel loader with a pile of deformable terrain in front of it. The goal is to fill the bucket by digging in the deformable terrain.