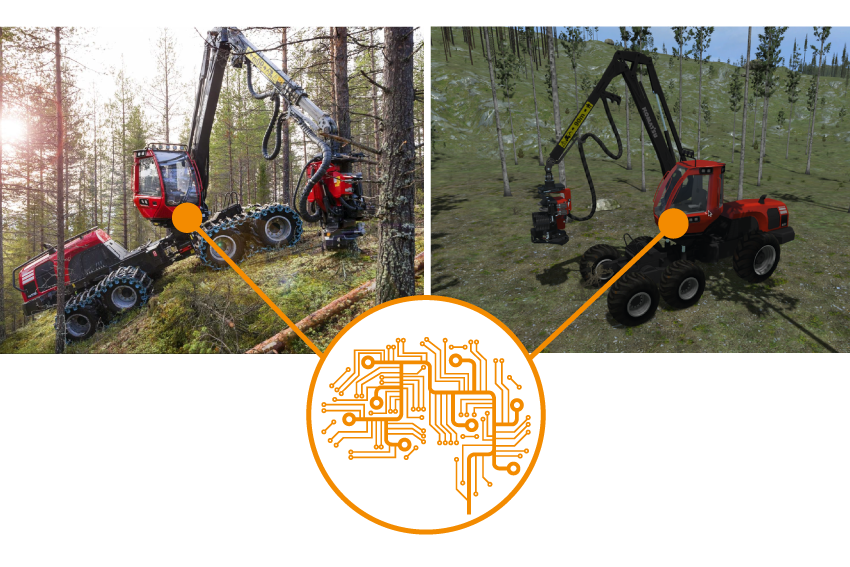

Despite all the automation efforts we see today, forestry machines are still mainly operated manually. The complex dynamics of the machines, combined with the rough, unstructured terrain they operate in, make them very difficult to automate. In this project, we used machine learning to train an AI agent to autonomously pick up a randomly placed log with the crane and gripper of a forestry machine.

Forestry machine operators spend more than 80% of their active working time controlling the crane, so an effective autonomous control system could, in theory, save a lot of time. We focused on automating one specific task with reinforcement learning: grasping a log with the crane of a forwarder.

Training a neural network for this task on a real forestry machine is not feasible: it would be dangerous, time-consuming and expensive, and would most likely ruin the machine. The training therefore has to be done in a simulated environment. But for the results to transfer to a real machine, the simulation has to be realistic, with accurate dynamics, forces, friction and energy.

The training environment was built with AGX Dynamics for Unity together with ML-Agents. Each training session ran for at least 35 million time steps (roughly 200,000 episodes) in eight parallel environments. The observation space consisted of the log position and the current state of each actuator: its angle, speed and applied motor torque. The agent controlled the target speed of each motor. The policies were parametrized by feedforward neural networks with three fully connected hidden layers of 256 neurons each, and were optimized using Proximal Policy Optimization (PPO).
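To make the setup above more concrete, here is a minimal, standard-library-only sketch of how the observation vector and a feedforward policy with three hidden layers of 256 units could fit together. It is not the ML-Agents implementation and not the project's code: the `ActuatorState` and `PolicyMLP` names, the six-actuator count, the tanh activations and the random initialization are all assumptions made for illustration. With PPO, the network output would typically be the mean of a Gaussian action distribution that is sampled during training; here it is simply returned as normalized target speeds.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


@dataclass
class ActuatorState:
    """Hypothetical per-actuator observation: joint angle, speed and applied torque."""
    angle: float   # rad
    speed: float   # rad/s
    torque: float  # N·m


def build_observation(log_position: Sequence[float],
                      actuators: Sequence[ActuatorState]) -> List[float]:
    """Concatenate the log position with (angle, speed, torque) for every actuator."""
    obs = list(log_position)
    for a in actuators:
        obs.extend([a.angle, a.speed, a.torque])
    return obs


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Fully connected layer with uniform weights scaled by 1/sqrt(fan-in)."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x: Sequence[float], layer: Layer) -> List[float]:
    """Compute W x + b for one fully connected layer."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


class PolicyMLP:
    """Observation -> 3 hidden layers of 256 units -> one target speed per motor."""

    def __init__(self, obs_dim: int, n_motors: int,
                 hidden: int = 256, n_hidden_layers: int = 3, seed: int = 0):
        rng = random.Random(seed)
        sizes = [obs_dim] + [hidden] * n_hidden_layers
        self.hidden_layers = [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]
        self.head = init_layer(hidden, n_motors, rng)

    def __call__(self, obs: Sequence[float]) -> List[float]:
        x = list(obs)
        for layer in self.hidden_layers:
            x = [math.tanh(v) for v in dense(x, layer)]  # tanh activation (assumed)
        # Squash to [-1, 1]; the environment would rescale to each motor's speed limits.
        return [math.tanh(v) for v in dense(x, self.head)]


if __name__ == "__main__":
    N_MOTORS = 6  # hypothetical actuator count
    actuators = [ActuatorState(angle=0.1 * i, speed=0.0, torque=0.0) for i in range(N_MOTORS)]
    obs = build_observation((2.0, -1.5, 0.3), actuators)  # made-up log position (m)
    policy = PolicyMLP(obs_dim=len(obs), n_motors=N_MOTORS)
    print(len(obs), len(policy(obs)))  # 21 6
```

With six actuators, the observation has 3 + 6 × 3 = 21 entries, and the policy returns one value per motor.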

Using curriculum learning to overcome the sparse-reward problem, we achieved very high success rates: the best control policy reached a grasping success rate of 97%. Including energy consumption in the reward function reduced total energy consumption by 60% while maintaining a high success rate; the best energy-optimized policy reached a grasping success rate of 93%. The energy incentive also gave substantially less jitter and smoother motion than policies without it. This matters because jerky motion poses a particular challenge to robotic control and efficient automation.
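A reward given only for a successful grasp is sparse, because an untrained policy almost never grasps the log by chance. One common form of curriculum learning starts with an easy version of the task and raises the difficulty once the agent succeeds often enough. The sketch below is a generic scheduler of that kind, not the curriculum used in the project: the `Lesson` and `Curriculum` names, the use of log spawn distance as the difficulty knob, the thresholds and the window size are all assumptions. ML-Agents also supports curricula natively through environment parameters in its trainer configuration; this plain-Python version only illustrates the idea.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass
class Lesson:
    max_log_distance: float   # hypothetical difficulty knob: max spawn distance of the log (m)
    success_threshold: float  # success rate required before moving to the next lesson


class Curriculum:
    """Advance through lessons when the recent success rate is high enough."""

    def __init__(self, lessons: List[Lesson], window: int = 100):
        self.lessons = lessons
        self.index = 0
        self.results = deque(maxlen=window)  # 1.0 = successful grasp, 0.0 = failure

    @property
    def lesson(self) -> Lesson:
        return self.lessons[self.index]

    def report_episode(self, grasped: bool) -> bool:
        """Record one episode outcome; return True if the curriculum advanced."""
        self.results.append(1.0 if grasped else 0.0)
        window_full = len(self.results) == self.results.maxlen
        on_last_lesson = self.index == len(self.lessons) - 1
        if window_full and not on_last_lesson:
            success_rate = sum(self.results) / len(self.results)
            if success_rate >= self.lesson.success_threshold:
                self.index += 1
                self.results.clear()  # judge the new lesson on fresh episodes only
                return True
        return False


def sparse_reward(grasped: bool) -> float:
    """Sparse reward: nonzero only when the log is grasped."""
    return 1.0 if grasped else 0.0
```

In a training loop, each episode would spawn the log within `curriculum.lesson.max_log_distance` of the gripper and call `report_episode` when the episode ends. Clearing the window after each promotion keeps a strong run on an easy lesson from immediately promoting the agent past a harder one.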

Results like these are only possible with a physics simulation, such as AGX Dynamics, that can accurately compute the energy in the system.
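An energy term in the reward depends on the simulator reporting actuator torques and speeds at every step. Below is a minimal sketch of that bookkeeping. It assumes that each motor's mechanical power is torque times angular speed and that braking energy is not recovered, which is why it takes the absolute value. The function names and the `energy_weight` value are hypothetical, and this is not the exact reward formulation used in the project.

```python
from typing import Sequence


def step_energy(torques: Sequence[float], speeds: Sequence[float], dt: float) -> float:
    """Energy (J) used by all motors during one time step of length dt (s).

    Mechanical power per motor is torque (N·m) times angular speed (rad/s).
    The absolute value assumes negative (braking) power is not recovered.
    """
    if len(torques) != len(speeds):
        raise ValueError("need one speed per torque")
    return sum(abs(tau * omega) for tau, omega in zip(torques, speeds)) * dt


def shaped_reward(grasped: bool, energy_j: float, energy_weight: float = 1e-3) -> float:
    """Sparse grasp reward minus an energy penalty; energy_weight is a made-up value."""
    return (1.0 if grasped else 0.0) - energy_weight * energy_j


# Example: two motors over one 0.02 s step (made-up numbers).
energy = step_energy(torques=[1500.0, -800.0], speeds=[0.4, 0.5], dt=0.02)
reward = shaped_reward(grasped=False, energy_j=energy)
```

Because the penalty grows with every unnecessary acceleration and reversal, a policy trained on such a reward has a direct incentive to avoid jittery motion. The quality of that incentive depends on how faithfully the simulator computes the torques.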

The project was carried out as a master's thesis by Jennifer Andersson, Uppsala University, together with Algoryx, and was further developed into a scientific paper in cooperation with Dr Martin Servin's group at Umeå University.

Further information and preprint: *Reinforcement Learning Control of a Forestry Crane Manipulator* by J. Andersson, K. Bodin, D. Lindmark, M. Servin, and E. Wallin.

Please don’t hesitate to contact us if you want to run a similar AI project, learn more about this project, or apply for a free trial of AGX Dynamics for Unity.